The aiComputerUse command integrates mouse and keyboard control of Anthropic Claude Computer Use into our Open-Source RPA software. This makes the Ui.Vision browser extension an easy way to demo and use this technology on any Mac, Linux or Windows machine.

If you are new to Ui.Vision and have not installed it yet, get started with this blog post: How to Run Anthropic Computer Use in Your Web Browser.

The aiComputerUse command is available in V9.3.8. Coming soon: the Computer Use "Chat" feature in the Ui.Vision side bar, a good way to get started with Computer Use prompting.

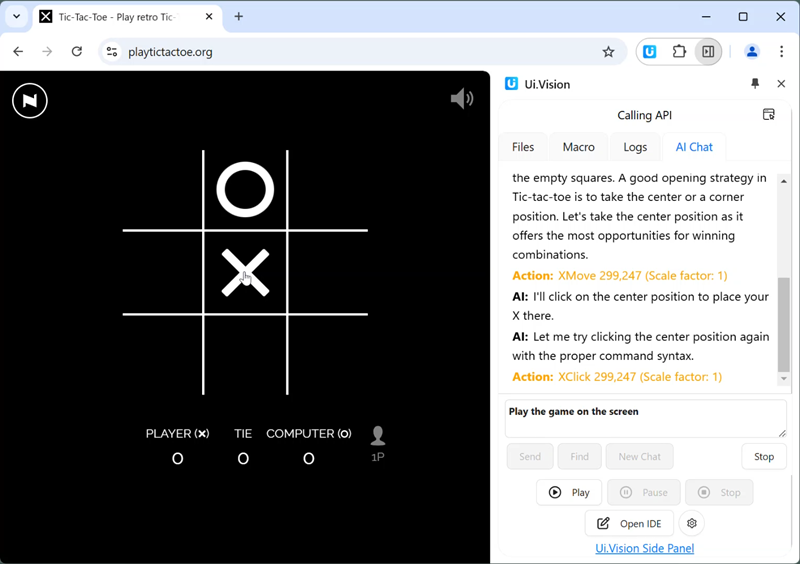

Ui.Vision plays TicTacToe with the help of Anthropic Computer Use.

The syntax is simple: aiComputerUse | prompt | var1. Claude's last response is stored in the variable var1. See also the other AI commands.
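Ui.Vision macros are saved as JSON, and the sketch below shows how the command | target | value syntax could map onto that file format. It assumes the usual export layout in which every step is an object with Command, Target and Value keys, so the prompt goes into Target and the result variable name into Value. The helper names, the macro name CU_FillForm_Sketch and the follow-up echo step are illustrative assumptions, not taken from the demo macros.

```python
import json


def make_command(command: str, target: str = "", value: str = "") -> dict:
    """One macro step in the assumed Command/Target/Value layout."""
    return {"Command": command, "Target": target, "Value": value, "Description": ""}


def build_computer_use_macro(prompt: str, result_var: str = "s") -> dict:
    """Hypothetical helper: run aiComputerUse, then print Claude's last response."""
    return {
        "Name": "CU_FillForm_Sketch",  # illustrative macro name
        "Commands": [
            # aiComputerUse | prompt | var1 -> Claude's last response is stored in var1
            make_command("aiComputerUse", prompt, result_var),
            # echo writes the stored response to the Ui.Vision log
            make_command("echo", "Claude said: ${" + result_var + "}"),
        ],
    }


if __name__ == "__main__":
    macro = build_computer_use_macro("Fill web form with artificial data")
    print(json.dumps(macro, indent=2))
```

Importing the printed JSON into Ui.Vision should give a two-step macro; if your installation exports macros with additional keys, mirror those instead.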

At the bottom of the AI settings page, you'll find the setting "aiComputerUse: Max loops before stopping" (default: 20). This setting limits the number of question/reply pairs between Ui.Vision ([u] in the log) and the AI agent ([a] in the log). The limit exists because the AI agent sometimes keeps going indefinitely, digging deeper and deeper down a rabbit hole, and an unlimited number of API calls would quickly drain your API credits. The default of 20 is relatively low; increase it as needed for the tasks you want to automate.
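The effect of the max-loops setting can be pictured as a bounded request/reply loop. This is a hypothetical model for illustration, not Ui.Vision's source code: ask_agent stands in for one API round trip, the "DONE" reply is an assumed sentinel for "task finished", and the string sent back after each reply is a placeholder for the real screenshot or tool result.

```python
from typing import Callable

DONE = "DONE"  # hypothetical sentinel meaning "task finished"


def run_agent(
    ask_agent: Callable[[str], str],
    prompt: str,
    max_loops: int = 20,
) -> tuple[list[tuple[str, str]], bool]:
    """Run at most max_loops question/reply pairs; return the log and a finished flag."""
    log: list[tuple[str, str]] = []
    message = prompt
    for _ in range(max_loops):
        reply = ask_agent(message)    # one API call = one question/reply pair
        log.append(("[u]", message))  # what Ui.Vision sent
        log.append(("[a]", reply))    # what the AI agent answered
        if reply == DONE:
            return log, True
        # Placeholder for executing the action and sending back a new screenshot
        message = f"screenshot after: {reply}"
    return log, False  # limit reached: stop instead of draining API credits


if __name__ == "__main__":
    def endless_agent(message: str) -> str:
        return "click 100,200"  # never declares the task done

    log, finished = run_agent(endless_agent, "Play this TicTacToe game", max_loops=3)
    print(finished, len(log))  # False 6
```

However the agent behaves, the number of API calls never exceeds max_loops, which is why raising the limit should go hand in hand with watching your API usage.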

Computer Use Demo 2: The aiComputerUse prompt is "Fill web form with artificial data".

The side panel has an AI tab. Here you can chat interactively with the Anthropic Computer Use API. You can use this feature, e.g., to develop a good prompt for the aiComputerUse command.

The FIND button in the chat window is for debugging. It marks the most recently mentioned x/y position in the chat with a red "X".

AI chat tab in the browser side panel


Here are some insights we gained from using Computer Use (CU):

- CU was trained to move the mouse before clicking. This is often unnecessary. Tell it "No mouse movements, only clicks". This saves time.

- Keep the screen (for desktop automation) or browser viewport (for browser automation) as small as possible. The smaller the screenshot area, the faster and cheaper the API response. Smaller screen sizes also improve the accuracy of the returned x,y values.

- Shorter prompts often work better than longer ones. If you ask Claude for prompting help, it tends to write long and often far too detailed prompts.

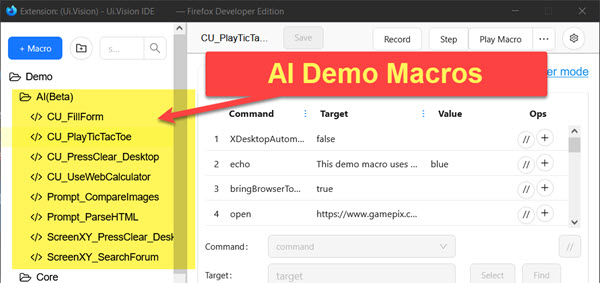

Prompts can be as easy as "Fill out this form with random data" or "Search for a flight". To get started, see the new Computer Use demo macros for example prompts that work. Or search our user forum for the tag "prompting".

- In general, we find that, at least for now, Computer Use works better for browser automation than for desktop automation. Google's Project Jarvis team seems to have realized this as well.

The aiScreenXY command is optimized for finding items in screenshots. Compared to aiComputerUse, this is a very narrow task. But unlike the output of aiComputerUse, the output of aiScreenXY is very predictable. Plus, aiScreenXY automatically stores the x,y values in the ${!ai1} and ${!ai2} variables for further use with XClick and XMove.
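The predictable aiScreenXY output makes a simple two-step pattern possible: locate an element, then click its coordinates. The sketch below builds those two macro steps in the same assumed Command/Target/Value JSON layout; it also assumes that XClick accepts an "x,y" coordinate pair as its target. The helper name find_and_click is hypothetical.

```python
import json


def find_and_click(description: str) -> list[dict]:
    """Hypothetical helper: locate an element with aiScreenXY, then click it with XClick."""
    return [
        # aiScreenXY stores the found coordinates in ${!ai1} (x) and ${!ai2} (y)
        {"Command": "aiScreenXY", "Target": description, "Value": "", "Description": ""},
        # Assumed: XClick takes an "x,y" coordinate pair as its target
        {"Command": "XClick", "Target": "${!ai1},${!ai2}", "Value": "", "Description": ""},
    ]


if __name__ == "__main__":
    print(json.dumps(find_and_click("Find the blue button"), indent=2))
```

Replacing XClick with XMove in the second step would move the mouse to the same position without clicking.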

Last but not least, the cost of using aiPrompt and aiScreenXY is significantly lower than for aiComputerUse. A single "aiScreenXY | Find the blue button" command uses roughly 1 cent in Anthropic API credits. By contrast, the "aiComputerUse | Play this TicTacToe game | s" command (in the CU_PlayTicTacToe demo macro) uses Anthropic API tokens worth ~US$0.30. The exact cost depends on how long the game lasts and on the screenshot size (= the size of the browser viewport). For Computer Use automation, we highly recommend using the smallest screenshot/browser area possible. Smaller screenshots not only upload faster, they also keep Claude's response time shorter and the API cost much lower.
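To see why the viewport size matters, the sketch below estimates screenshot input tokens with the rule of thumb from Anthropic's vision documentation, tokens ≈ (width × height) / 750. It is an approximation only: it ignores the automatic downscaling of very large images, the text part of each request and per-model pricing, and it assumes one screenshot per loop. The function names are hypothetical.

```python
import math


def estimate_image_tokens(width_px: int, height_px: int) -> int:
    """Approximate input tokens for one screenshot: (width * height) / 750, rounded up.

    Rule of thumb only; does not model the downscaling of very large images.
    """
    return math.ceil(width_px * height_px / 750)


def estimate_run_tokens(width_px: int, height_px: int, screenshots: int) -> int:
    """Screenshot tokens for a whole run (text tokens are not included)."""
    return screenshots * estimate_image_tokens(width_px, height_px)


if __name__ == "__main__":
    for w, h in [(1280, 800), (800, 600)]:
        print(f"{w}x{h}: ~{estimate_image_tokens(w, h)} tokens per screenshot, "
              f"~{estimate_run_tokens(w, h, 20)} for 20 screenshots")
```

By this estimate, an 800x600 viewport needs less than half the screenshot tokens of a 1280x800 one. For scale, at the rates quoted above (~1 cent per aiScreenXY call, ~US$0.30 per TicTacToe game), one aiComputerUse game costs about as much as 30 aiScreenXY calls.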

Test Computer Use and other AI commands with the demo macros.


...then please contact us.